GPU

MetaY is an app that lets users contribute their unused GPU resources to AI deep learning inference tasks. By putting the spare capacity of your GPU to work, MetaY lets you earn rewards while supporting AI research and development. It is designed to be simple to set up and run, and to use only idle GPU capacity so that your own usage is not affected. 18 Aug 2024

Machine learning

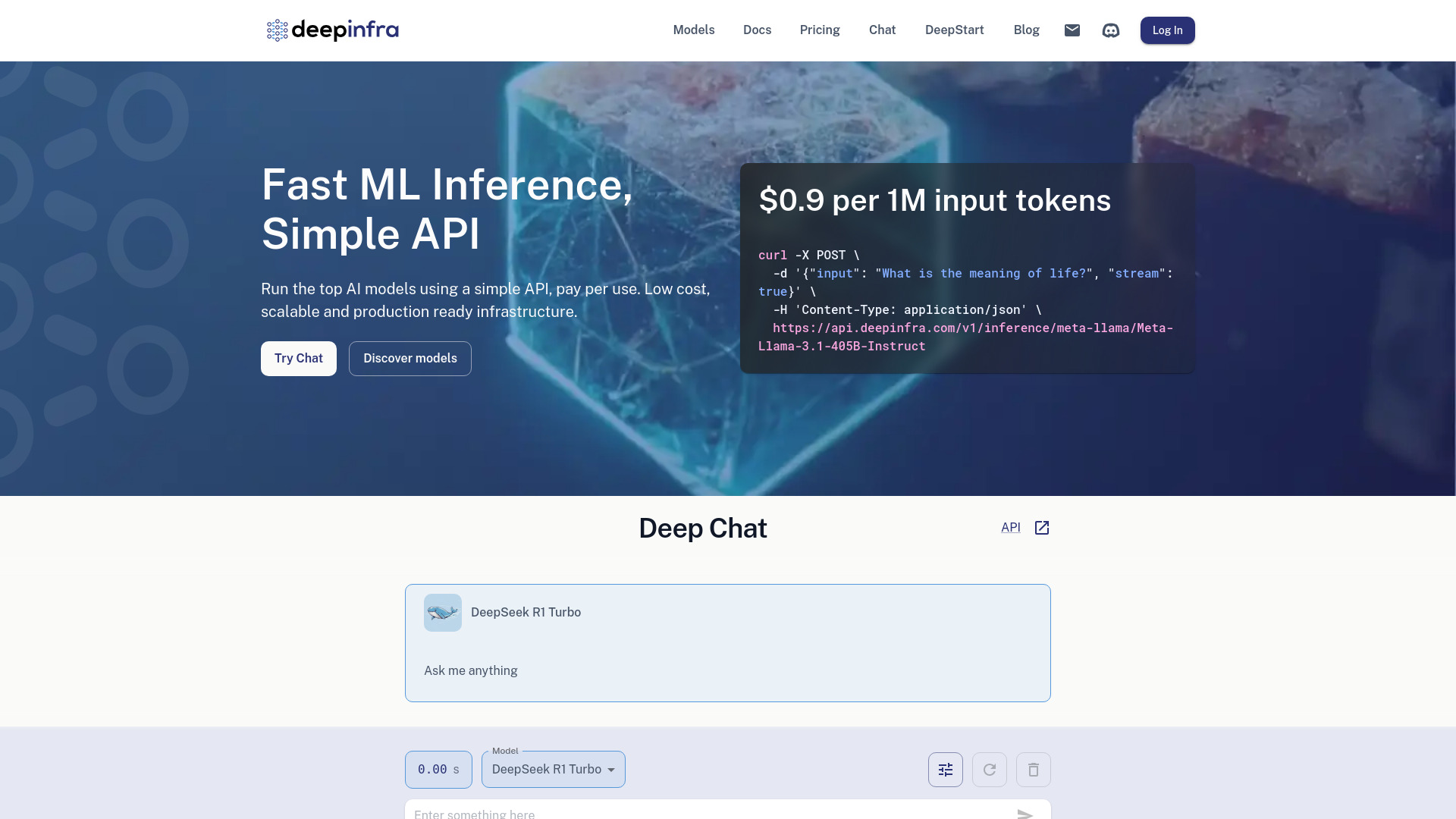

Deep Infra offers cost-effective, scalable, easy-to-deploy, production-ready machine learning models and infrastructure for deep learning. Its platform runs top AI models behind a simple API, with pay-per-use pricing and low-latency inference. Users can deploy custom LLMs on dedicated GPUs and access models for text generation, text-to-speech, text-to-image, and automatic speech recognition. 07 Apr 2025

AI

Prem is an applied AI research lab working toward a future in which everyone can access sovereign, private, and personalized AI. It focuses on building secure, personalized AI models and offers products for both enterprises and consumers. Its core products include an Autonomous Finetuning Agent and Encrypted Inference, which uses TrustML™ to protect sensitive data. Prem also works on transparent, secure, and explainable reasoning through its Specialized Reasoning Models (SRM). It publishes open-source models such as Prem-1B-SQL and the Prem-1B series for Retrieval-Augmented Generation (RAG). 21 May 2024

LLMs

OpenRouter is a unified interface for LLMs. It advertises lower prices, better uptime, and no subscription requirement. It provides access to all major models through a single interface, and the OpenAI SDK works with it out of the box. OpenRouter improves availability through its distributed infrastructure by falling back to other providers when one goes down. It also optimizes for price and performance, running at the edge and adding only about 25 ms of latency between users and their inference provider. Organizations can set fine-grained data policies so that prompts are sent only to trusted models and providers. 23 Aug 2024

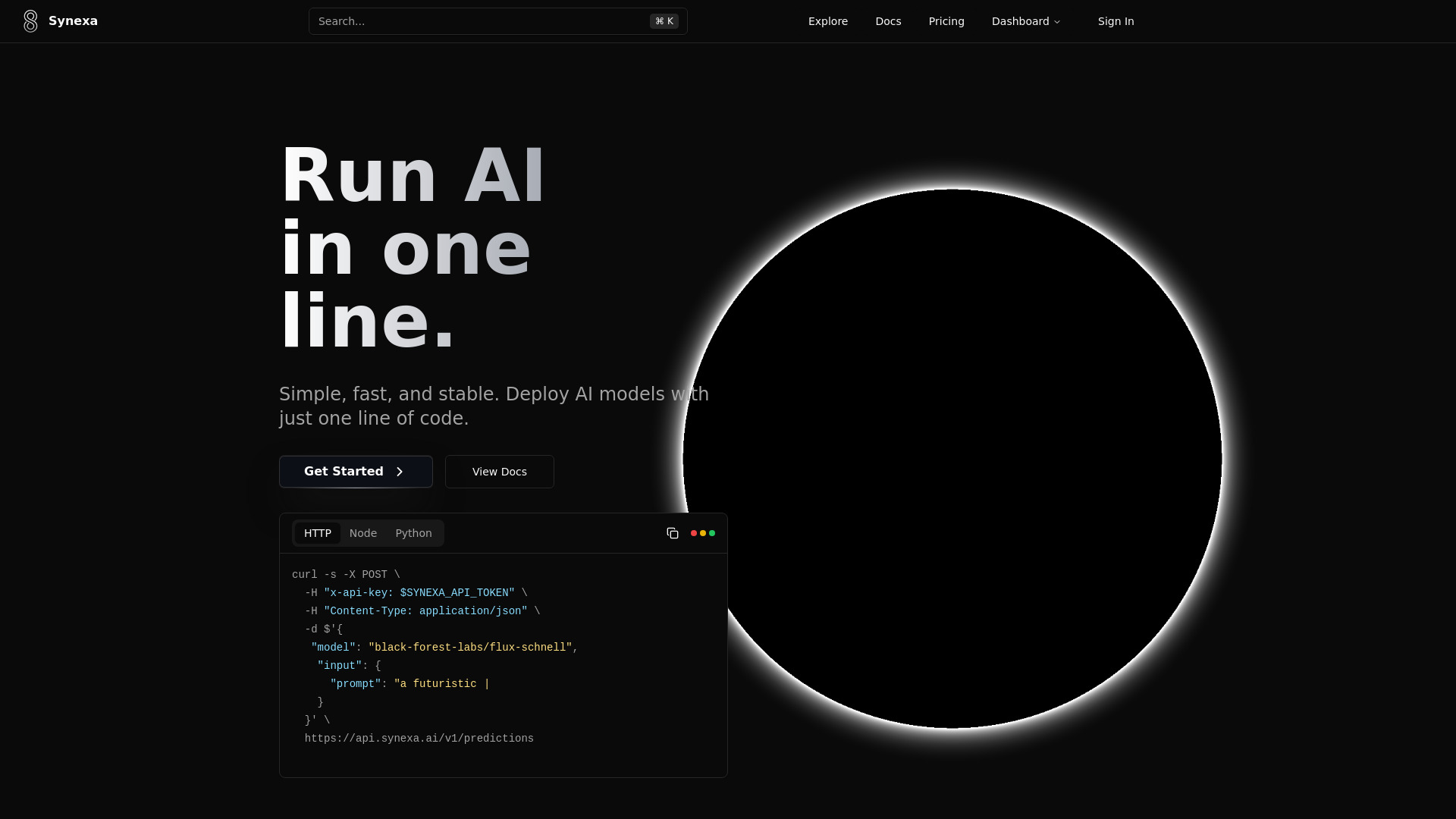

AI deployment

Synexa AI is an AI deployment and infrastructure platform that lets users run AI models with a single line of code. It is designed to be fast, stable, and developer-friendly, offering cost-effective GPU pricing, automatic scaling, and a polished developer experience. Synexa provides access to more than 100 production-ready AI models served by a high-speed inference engine. 31 Jan 2025

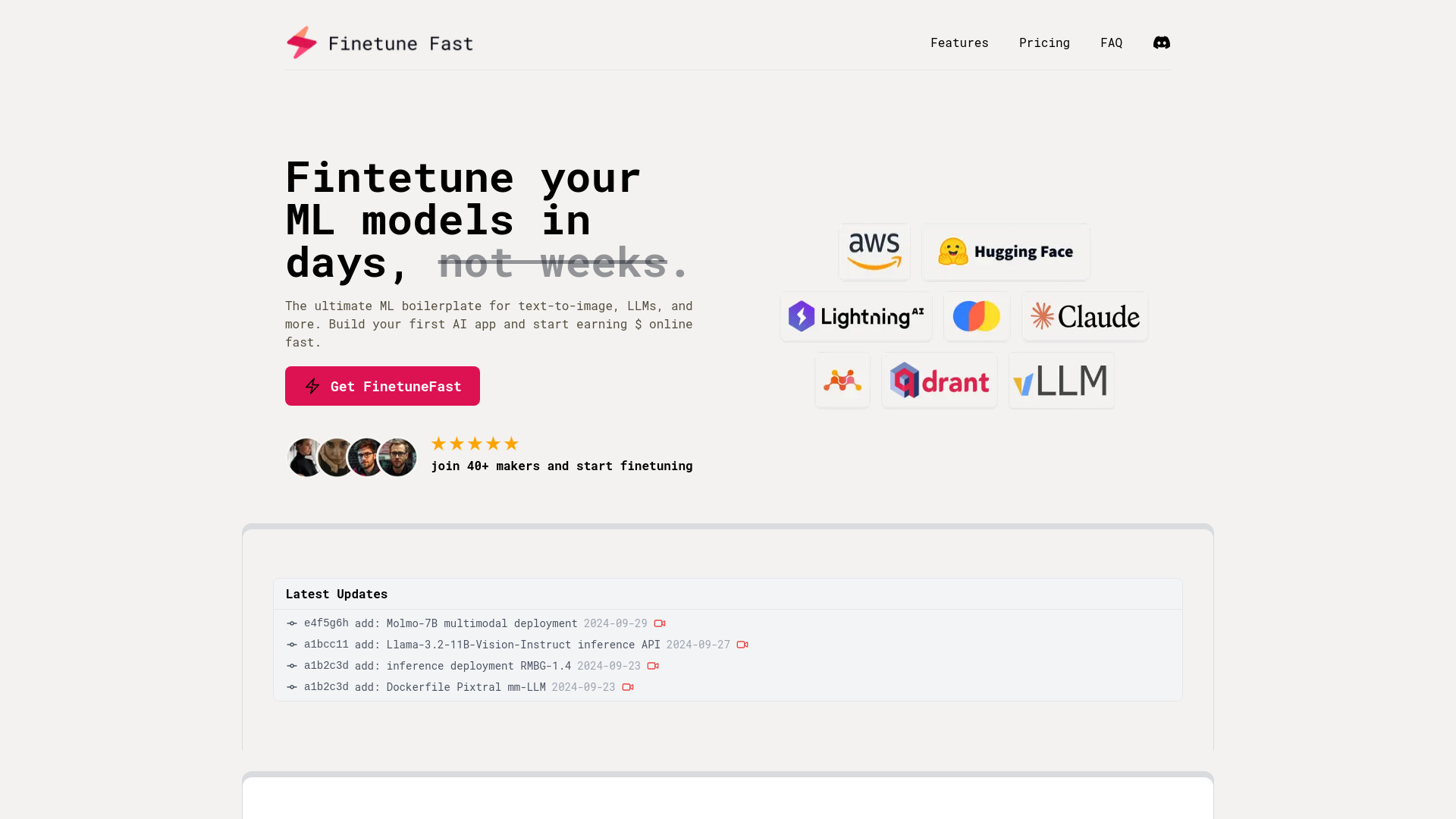

ML Model Boilerplate

FinetuneFast is an ML model boilerplate designed to help users quickly fine-tune AI models and deploy them to production. It aims to cut the time and complexity of setting up model training, preparing data, handling API integration, evaluating models, deploying them, and scaling infrastructure. FinetuneFast supports a range of models, including text-to-image models and LLMs, and offers pre-configured training scripts, efficient data-loading pipelines, and one-click model deployment. 10 Oct 2024

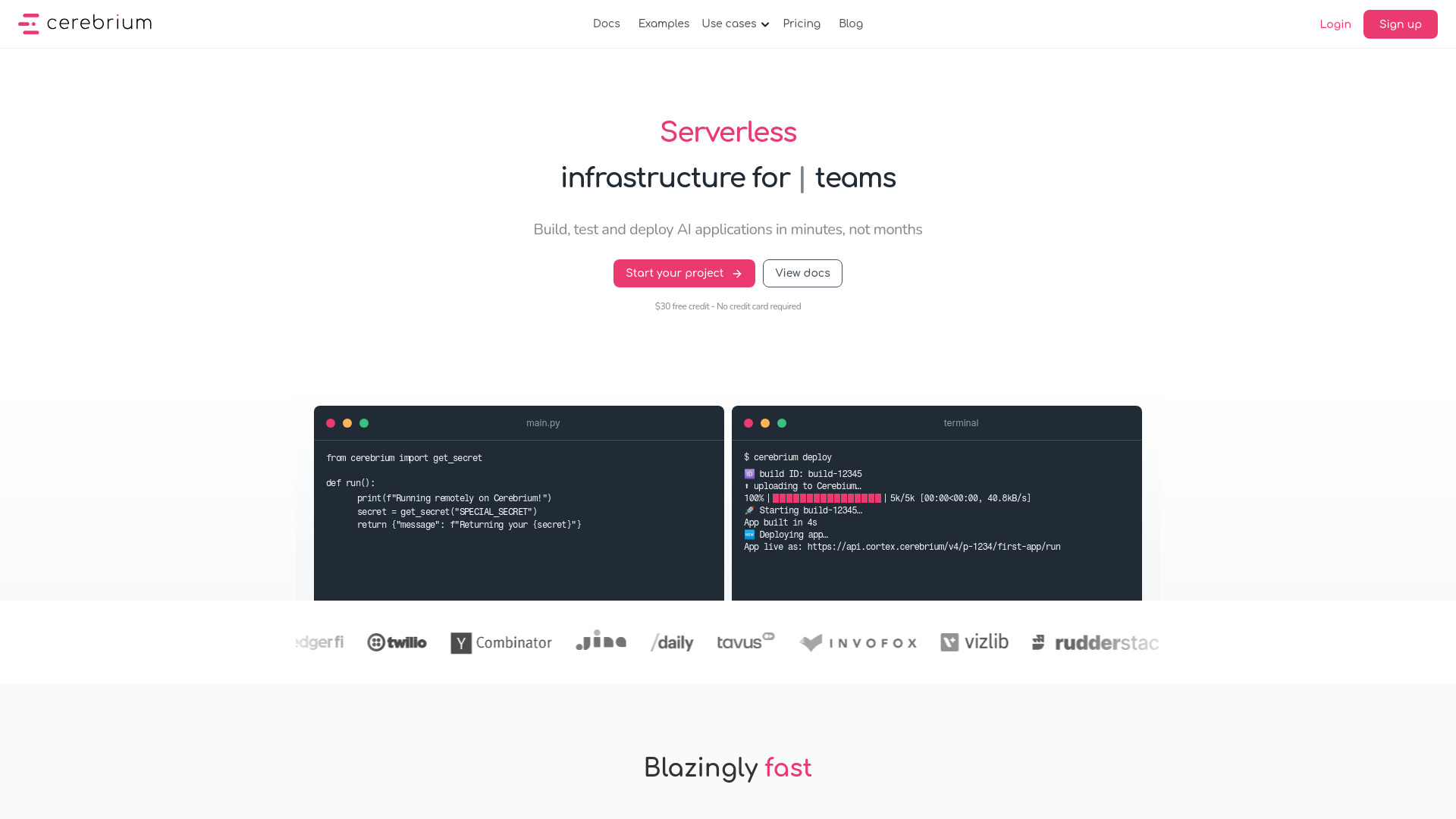

Serverless

Cerebrium is a serverless AI infrastructure platform that simplifies building, deploying, and scaling AI applications. It offers a variety of GPUs, large-scale batch job execution, and support for real-time voice applications. Cerebrium positions itself as a cost-effective alternative to AWS and GCP, with customers reporting cost savings of more than 40%. It optimizes its pipeline for fast cold starts and claims 99.999% uptime, SOC 2 and HIPAA compliance, and comprehensive observability tooling. 07 Jan 2025