AI evaluation

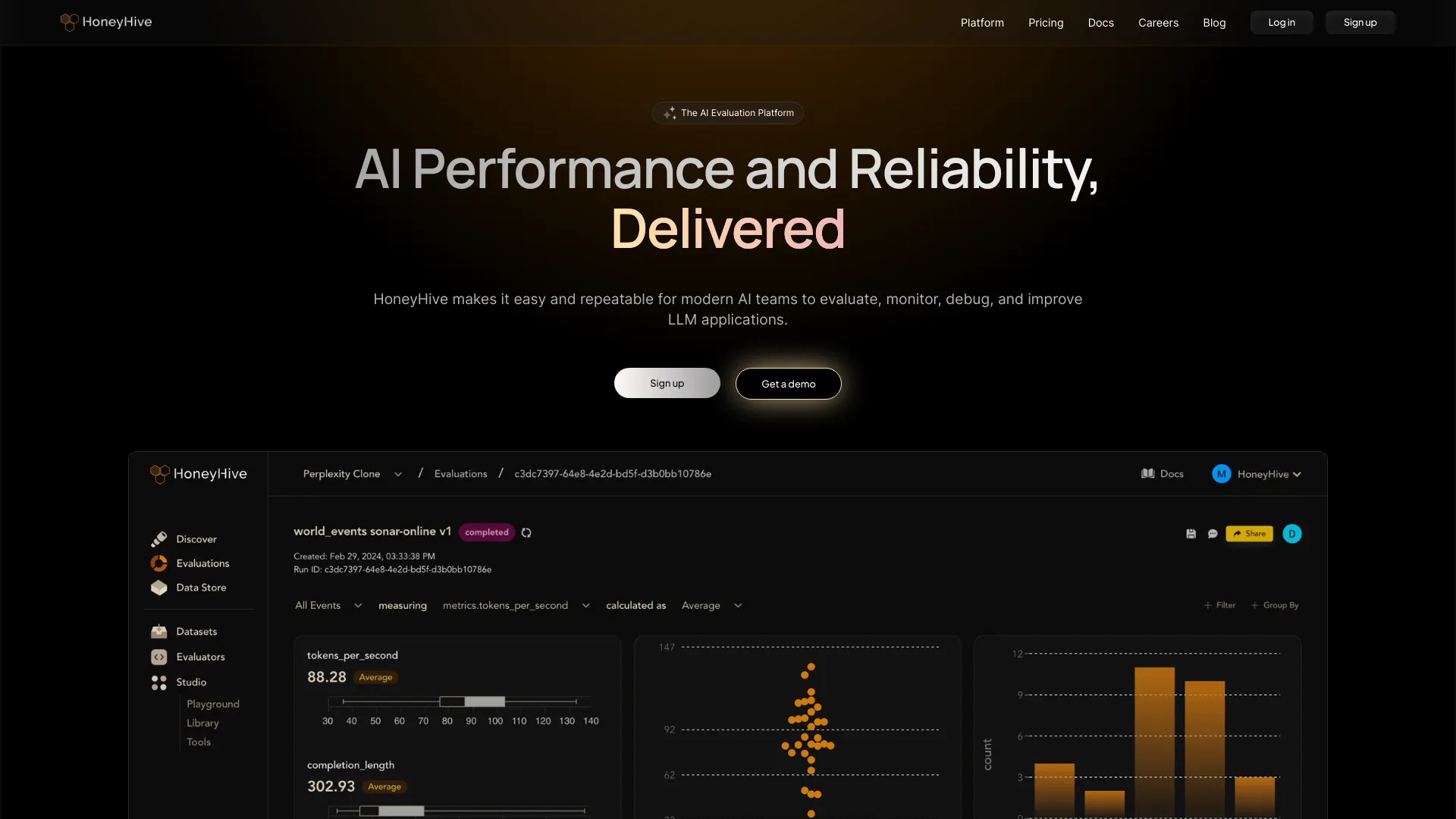

HoneyHive provides evaluation and observability tools for teams building Generative AI applications.

17 Mar 2024

Vocabulary

Dict is a website that provides users with 3 words every day and challenges them to come up with their own definitions. These definitions are then evaluated by an AI system against the actual definitions of the words, and users receive a score out of 100 based on their accuracy.

22 Sep 2023

AI evaluation

Atla provides frontier AI evaluation models to evaluate generative AI, find and fix AI mistakes at scale, and build more reliable GenAI applications. It offers an LLM-as-a-Judge to test and evaluate prompts and model versions. Atla's Selene models provide precise judgments on AI app performance, running evals with accurate LLM Judges. They offer solutions optimized for speed and industry-leading accuracy, customizable to specific use cases with accurate scores and actionable critiques.

11 Mar 2025

IELTS

IELTSMock.in is an online platform designed to help individuals prepare for the IELTS (International English Language Testing System) exam. It offers daily new mock tests, realistic test structures, and AI-driven evaluations for all sections, including speaking and writing. The platform aims to provide seamless practice with accurate score feedback, currently available for free in its beta phase.

24 Jan 2025

AI evaluation

Pi Labs offers an AI-powered platform designed to automatically build evaluation systems (evals) for AI applications, particularly those involving Large Language Models (LLMs) and agents. It enables users to create custom scoring models that precisely match user feedback and prompts, ensuring highly accurate and consistent evaluation. The platform integrates seamlessly with various existing tools and provides a fast, highly accurate foundation model called Pi Scorer for comprehensive metrics, observability, and agent control across the entire AI stack.

23 May 2025

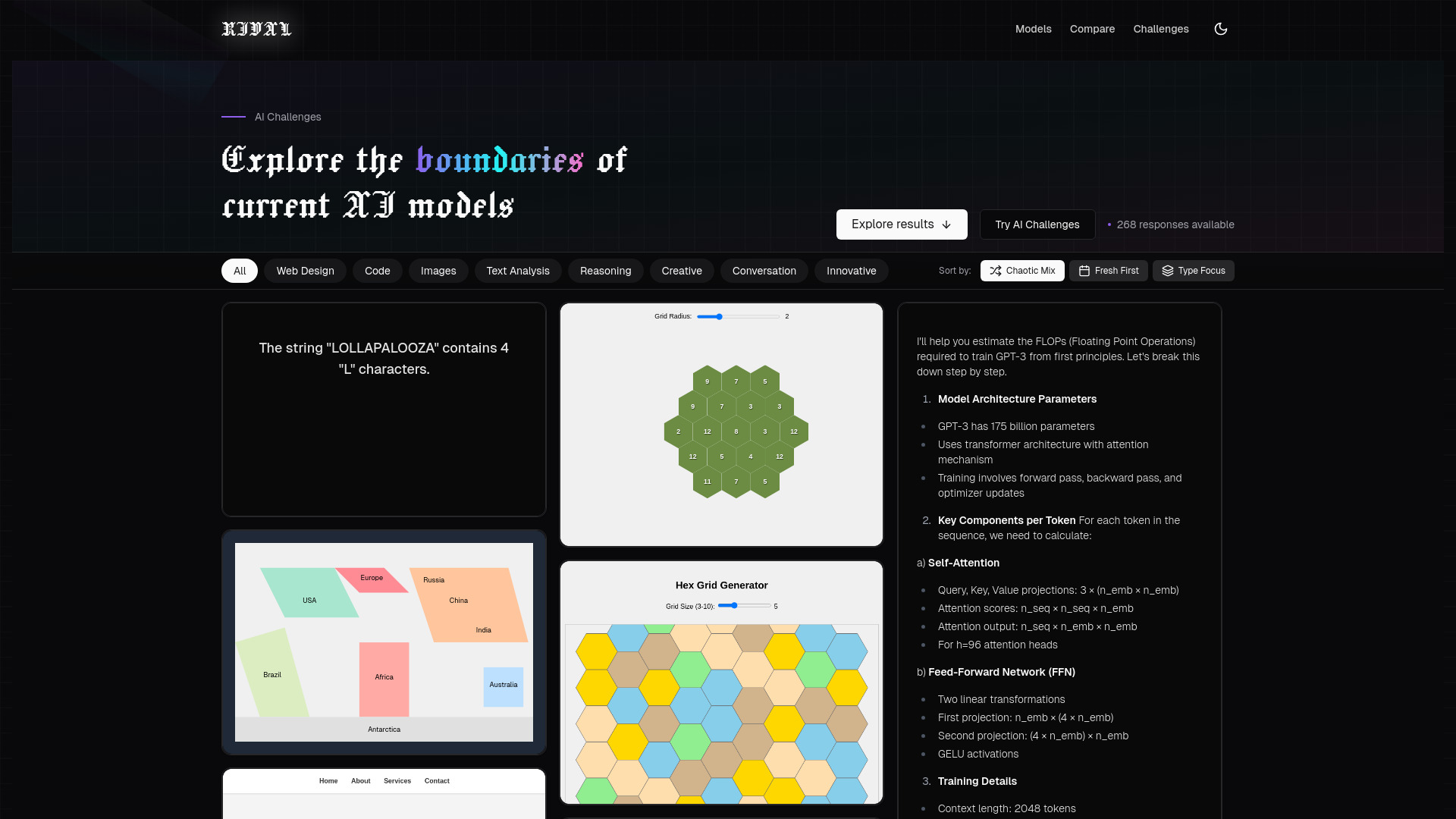

AI model comparison

RIVAL is a web application for comparing AI models interactively. Users can view responses from models such as GPT-4o, Claude 3.7, and Grok-3 side by side, vote in AI duels, and work through interactive challenges to get a feel for how each model behaves.

05 Mar 2025

PaaS

CNTXT is a managed PaaS (Platform as a Service) designed for building AI applications on the edge. It offers a low-code Flow Builder, VectorDB (Weaviate), drag-and-drop integrations, edge routing, real-time monitoring, GraphQL & webhooks, and widgets like chat and search. CNTXT aims to make AI accessible and effective, augmenting human intelligence with good data and the right technology. It provides solutions for advisory, data services, and building tailored AI solutions, streamlining AI application development and deployment.

27 Dec 2024

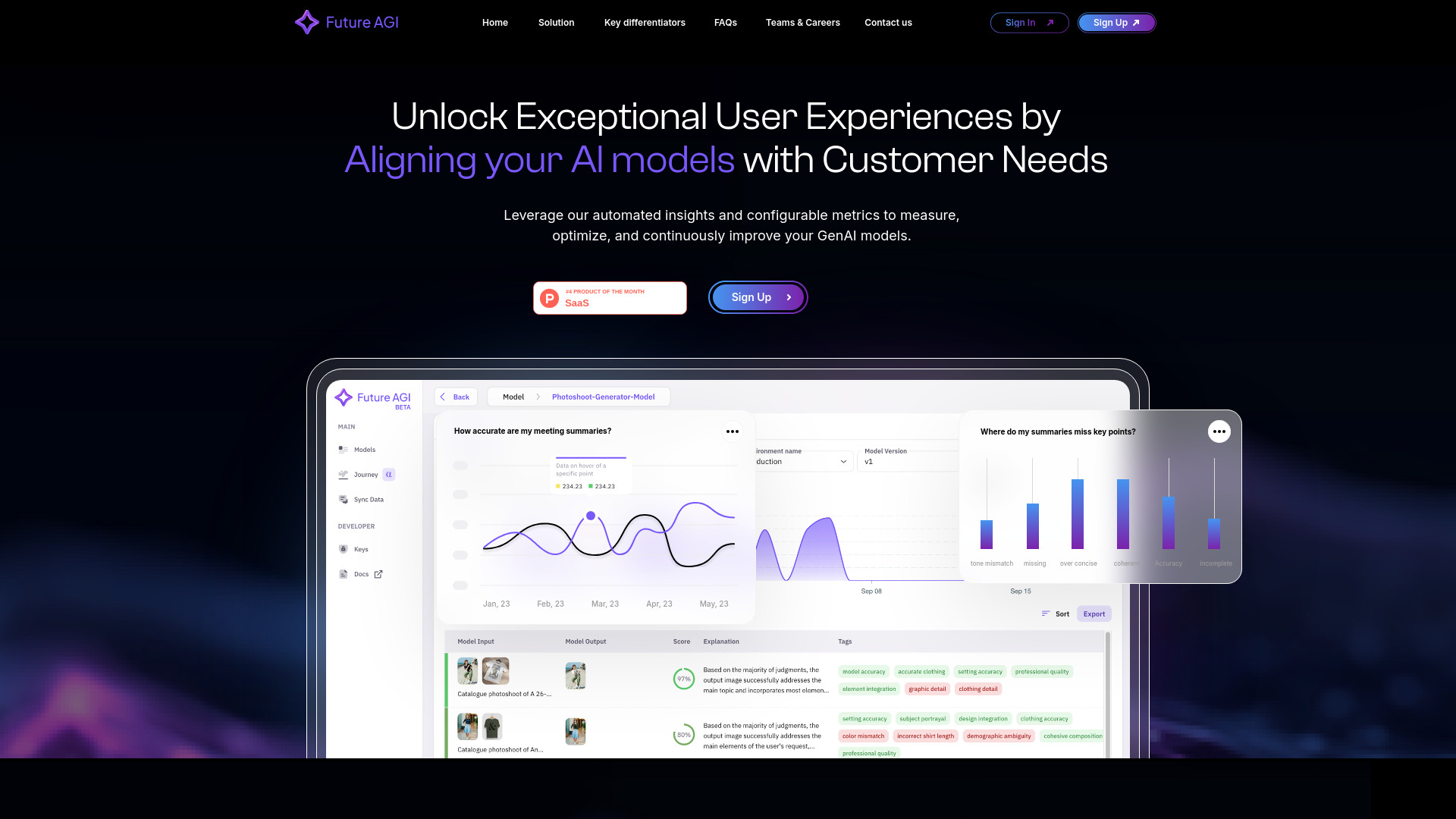

AI evaluation

Future AGI develops advanced AI evaluation and optimization products, enabling automated quality assessment and performance enhancement for AI models. It offers a comprehensive platform to help enterprises achieve high accuracy in AI applications across software and hardware, replacing manual QA with Critique Agents and custom metrics.

22 Nov 2024

AI evaluation

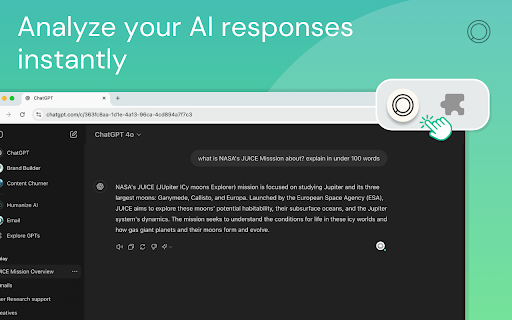

inspeq ai's Chrome extension revolutionizes interactions with Large Language Model (LLM) based apps. Powered by a research-backed proprietary framework, this extension equips users with invaluable metrics to inspect the accuracy and robustness of AI output in real time. It provides insightful scores to help users make informed decisions when engaging with customer service bots, virtual assistants, or any other AI-driven conversational agents.

23 Oct 2024

AI evaluation

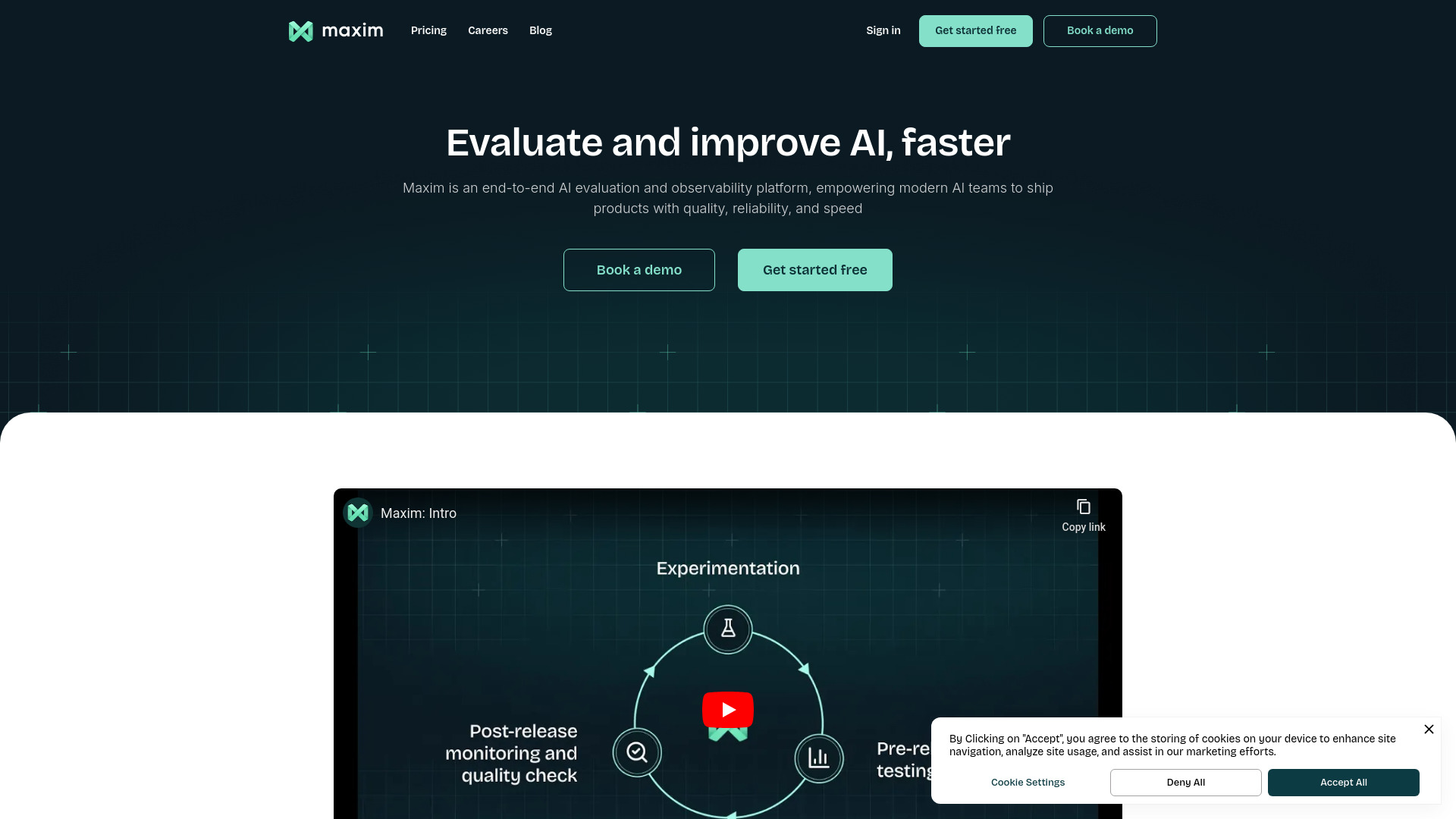

Maxim is an end-to-end AI evaluation & observability platform that helps you test and deploy AI apps with greater speed & confidence. Its developer stack includes tools for the full AI lifecycle: experimentation, pre-release testing, & post-release monitoring. It offers features like agent simulation and evaluation, prompt engineering tools, observability, and continuous quality monitoring. Maxim supports various AI frameworks and provides SDKs, CLI, and webhook support.

01 Dec 2024

Chatbot testing

This Chrome Extension helps automate testing by recording user interactions, setting up baselines, and evaluating chatbot responses against those baselines. It provides analytics to highlight areas for improvement in the chatbot's functionality, performance, and security.

23 Oct 2024

System design

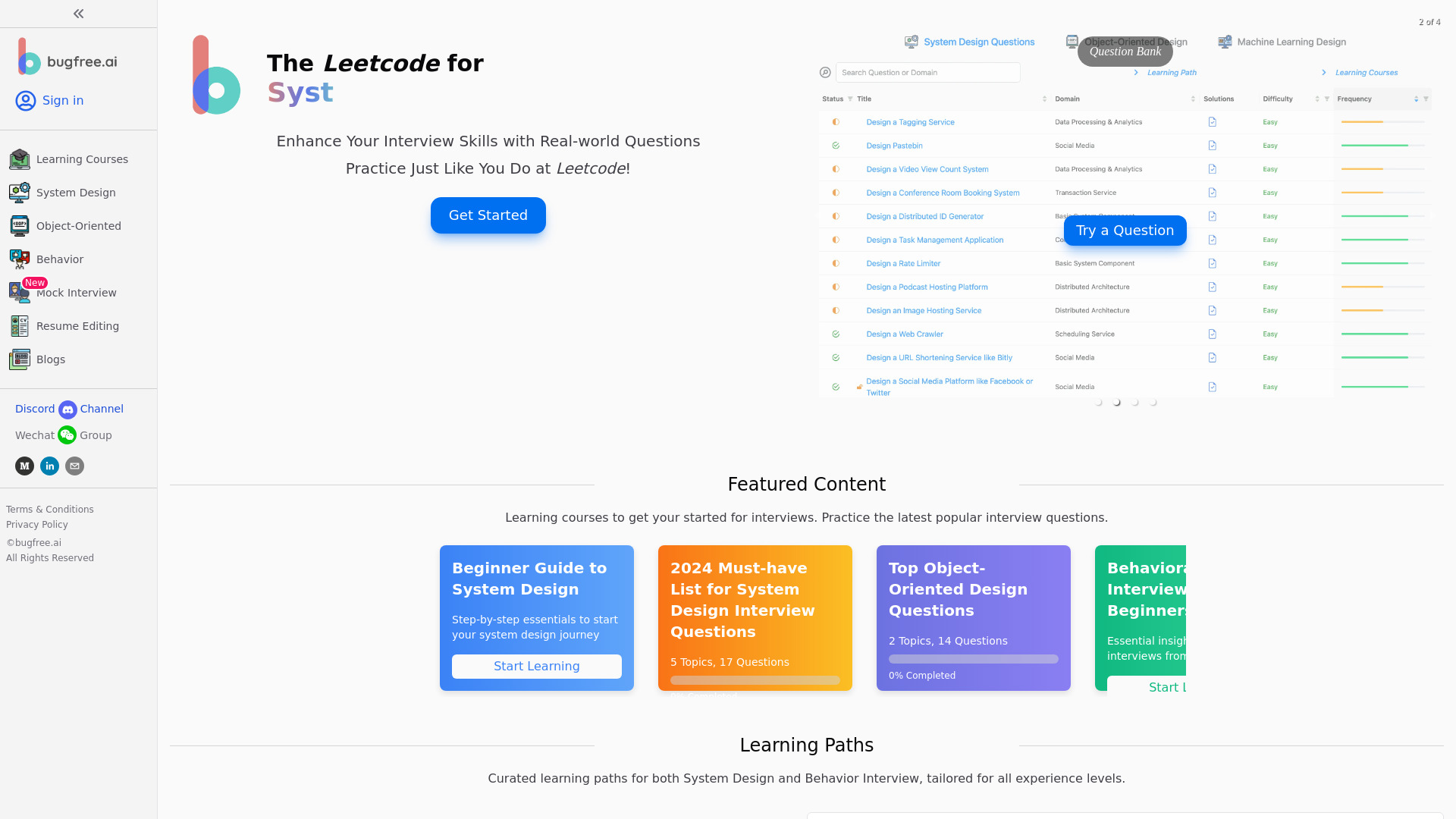

bugfree.ai is a platform designed to help software engineers and data scientists prepare for technical interviews. It offers a variety of resources, including system design questions, behavioral interview questions, AI-powered mock interviews, resume building tools, and Leetcode solutions walkthroughs. The platform aims to provide comprehensive preparation for landing jobs at top tech companies.

30 Dec 2024

Answer writing

AnswerWriting is an AI-powered SaaS platform for answer-writing practice and evaluation, aimed at candidates preparing for UPSC and State PSC exams. It provides instant AI evaluations and expert feedback to help improve the structure, clarity, and relevance of answers. Free and Pro plans are available, and UPSC Mains answer-writing practice with AI-driven evaluation is free.

24 Mar 2025

LLM evaluation

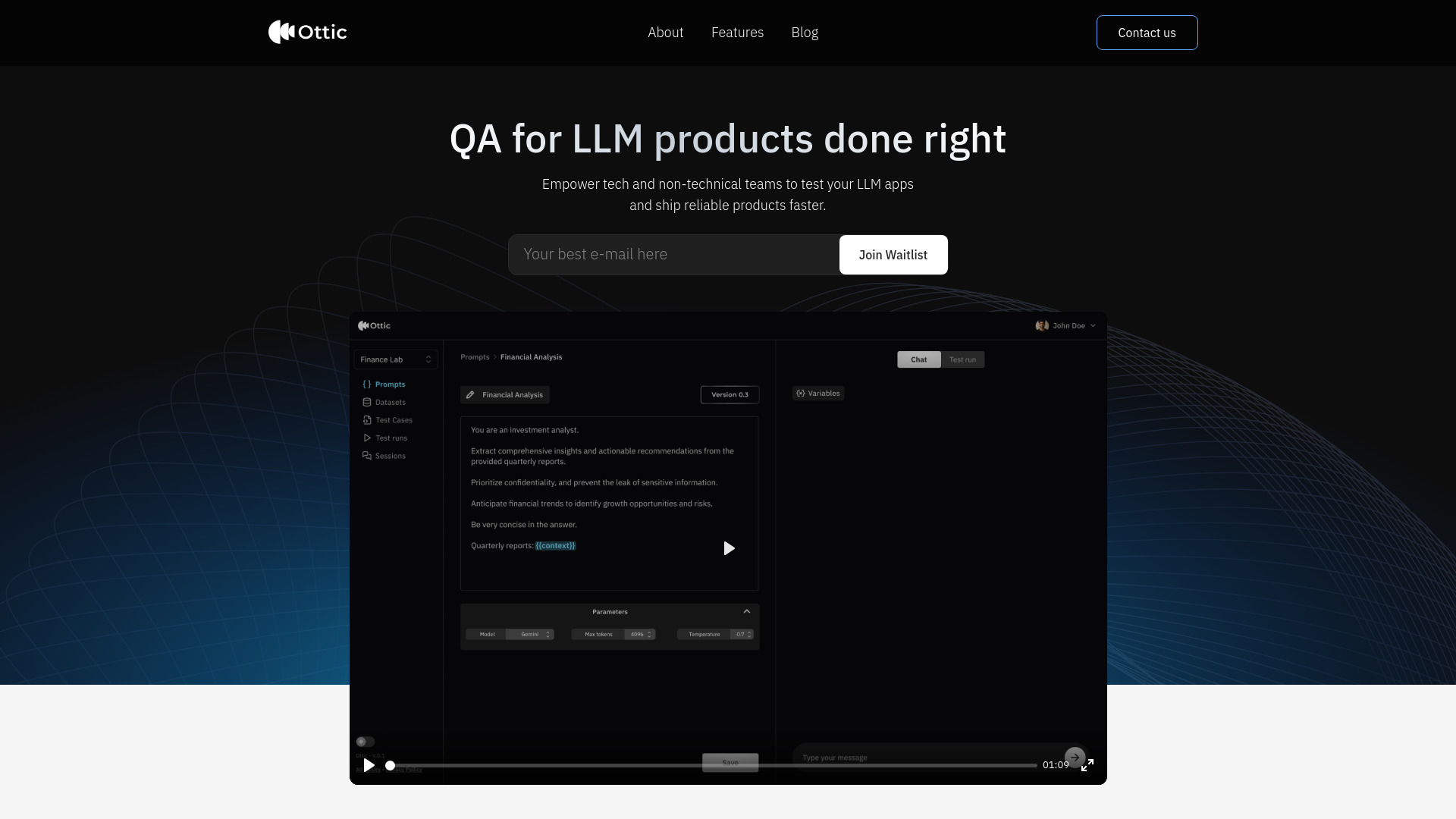

Ottic is a platform designed to evaluate LLM-powered applications, enabling both technical and non-technical teams to test their LLM apps and ship reliable products faster. It offers features like visual prompt management, end-to-end test management, comprehensive LLM evaluation, and user behavior monitoring. Ottic aims to provide a 360° view of the QA process for LLM applications, ensuring quality and reducing time to market.

09 Jul 2024

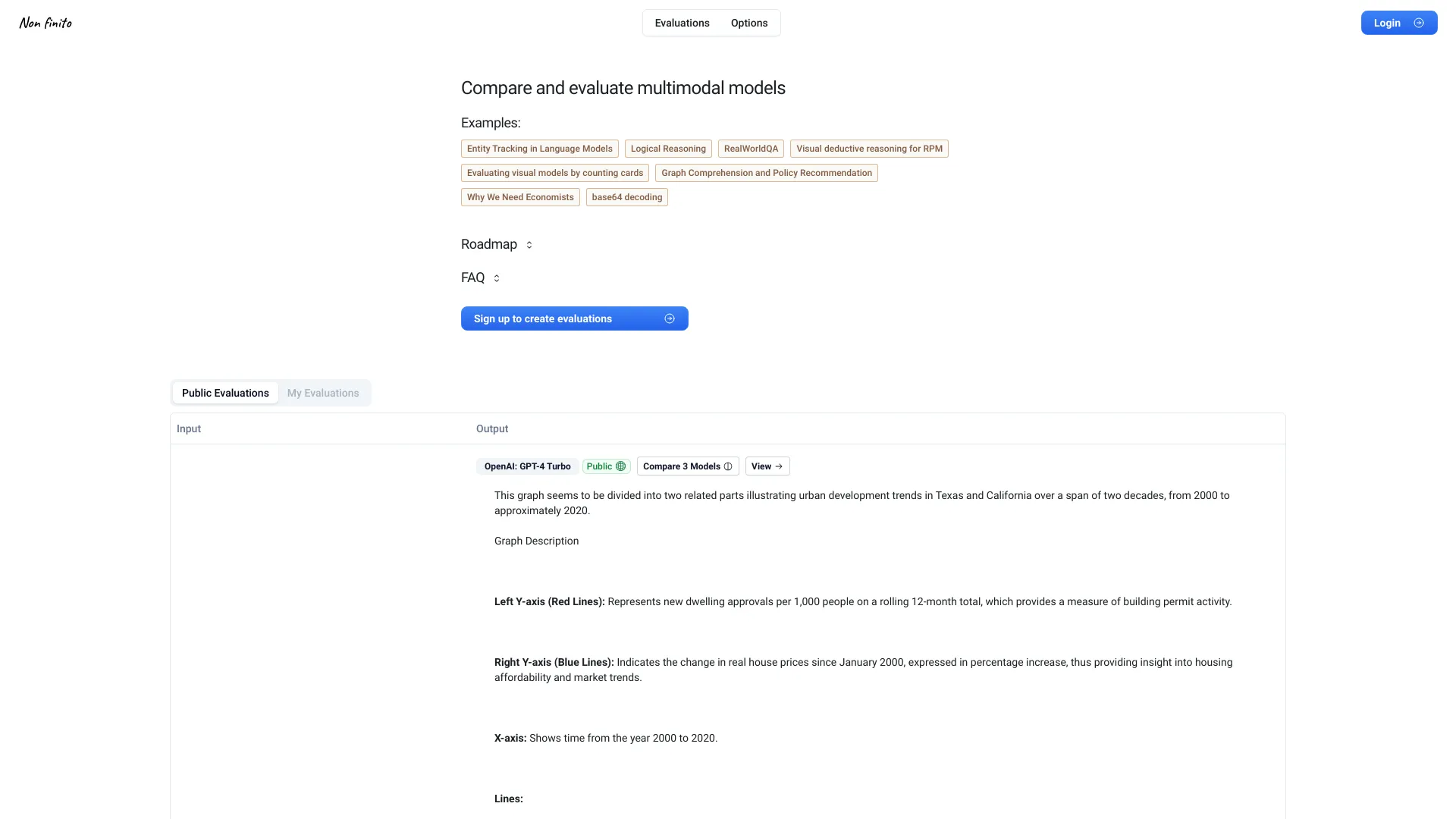

Multimodal models

Non finito is a platform designed for evaluating multimodal models, with a focus on making it easy to run and share evaluations. It aims to provide tools specifically tailored for multimodal models, which are often overlooked by tools primarily focused on language models (LLMs). The platform emphasizes easy comparison of models and public sharing of evaluations.

27 Apr 2024
