Prompt engineering

Trainkore is a prompting and RAG (Retrieval-Augmented Generation) platform designed to automate prompts and save costs. It offers features like auto prompt generation, model switching, evaluation, observability, and a prompt playground. It integrates with various AI providers and frameworks like LangChain and LlamaIndex.

21 Oct 2024

LLM evaluation

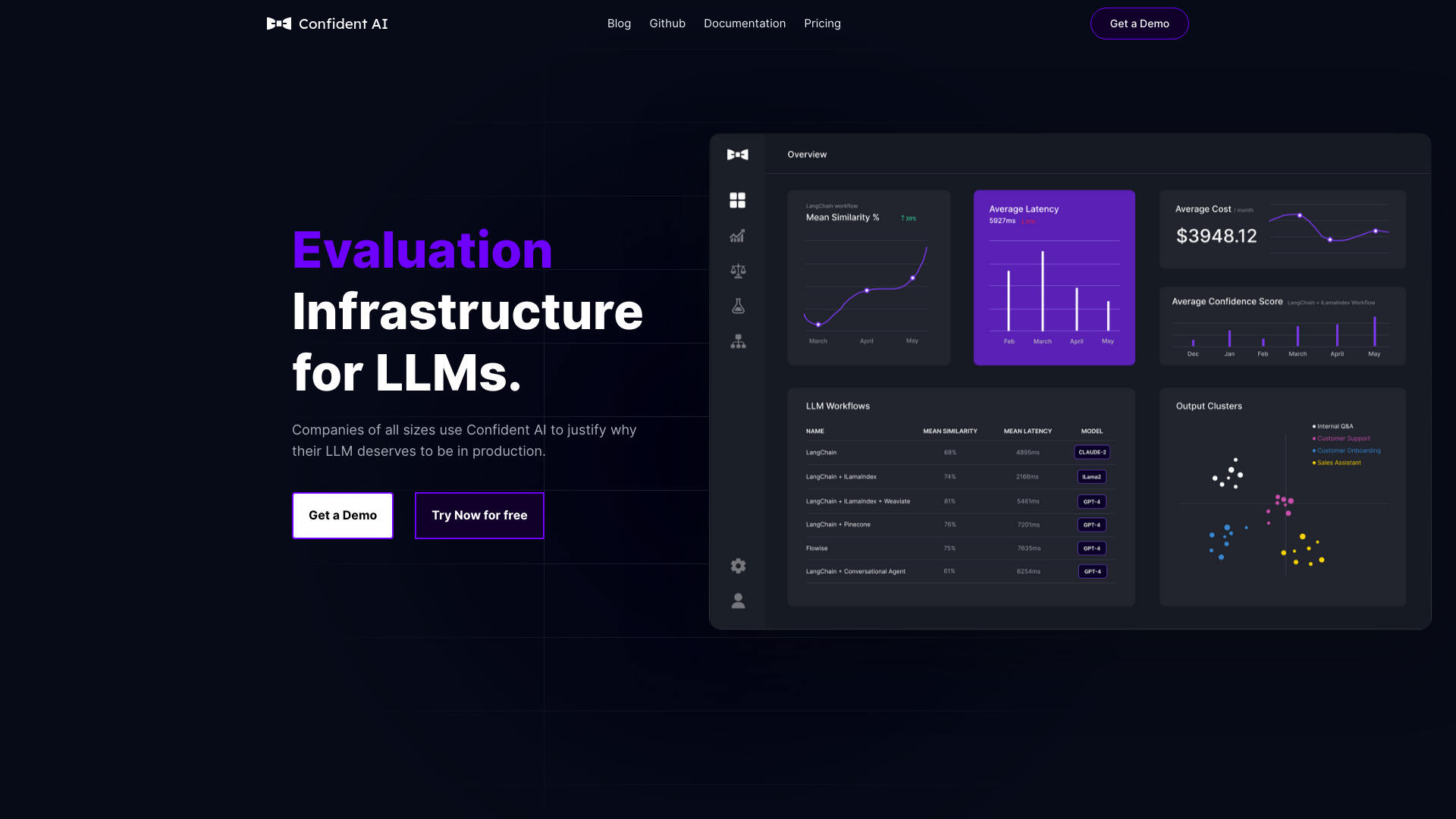

Confident AI is an all-in-one LLM evaluation platform built by the creators of DeepEval. It offers 14+ metrics to run LLM experiments, manage datasets, monitor performance, and integrate human feedback to automatically improve LLM applications. It works with DeepEval, an open-source framework, and supports any use case. Engineering teams use Confident AI to benchmark, safeguard, and improve LLM applications with best-in-class metrics and tracing. It provides an opinionated solution to curate datasets, align metrics, and automate LLM testing with tracing, helping teams save time, cut inference costs, and convince stakeholders of AI system improvements.

31 Jul 2024

AI evaluation

Pi Labs offers an AI-powered platform designed to automatically build evaluation systems (evals) for AI applications, particularly those involving Large Language Models (LLMs) and agents. It enables users to create custom scoring models that precisely match user feedback and prompts, ensuring highly accurate and consistent evaluation. The platform integrates seamlessly with various existing tools and provides a fast, highly accurate foundation model called Pi Scorer for comprehensive metrics, observability, and agent control across the entire AI stack.

23 May 2025

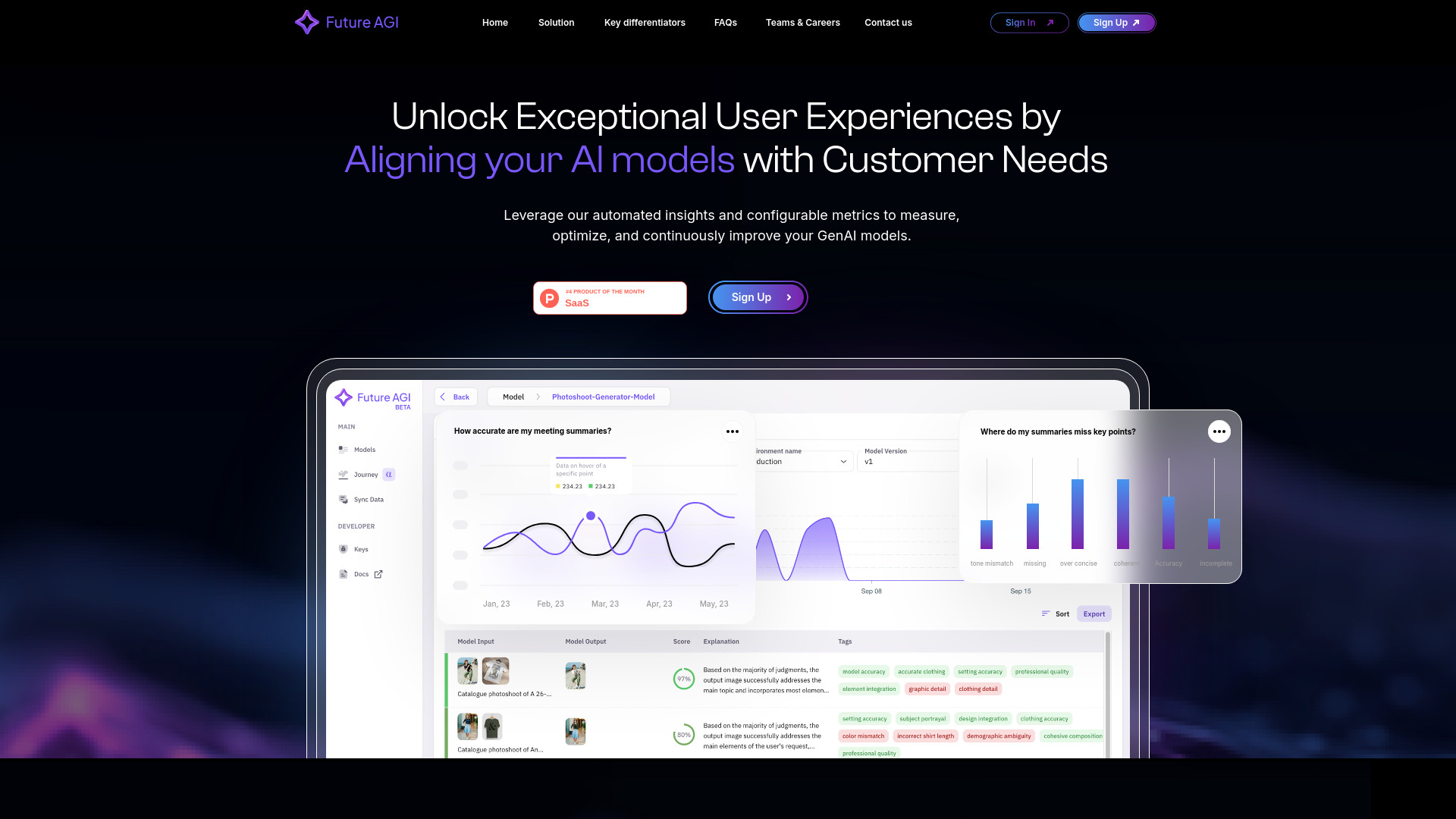

AI evaluation

Future AGI develops advanced AI evaluation and optimization products, enabling automated quality assessment and performance enhancement for AI models. It offers a comprehensive platform to help enterprises achieve high accuracy in AI applications across software and hardware, replacing manual QA with Critique Agents and custom metrics.

22 Nov 2024

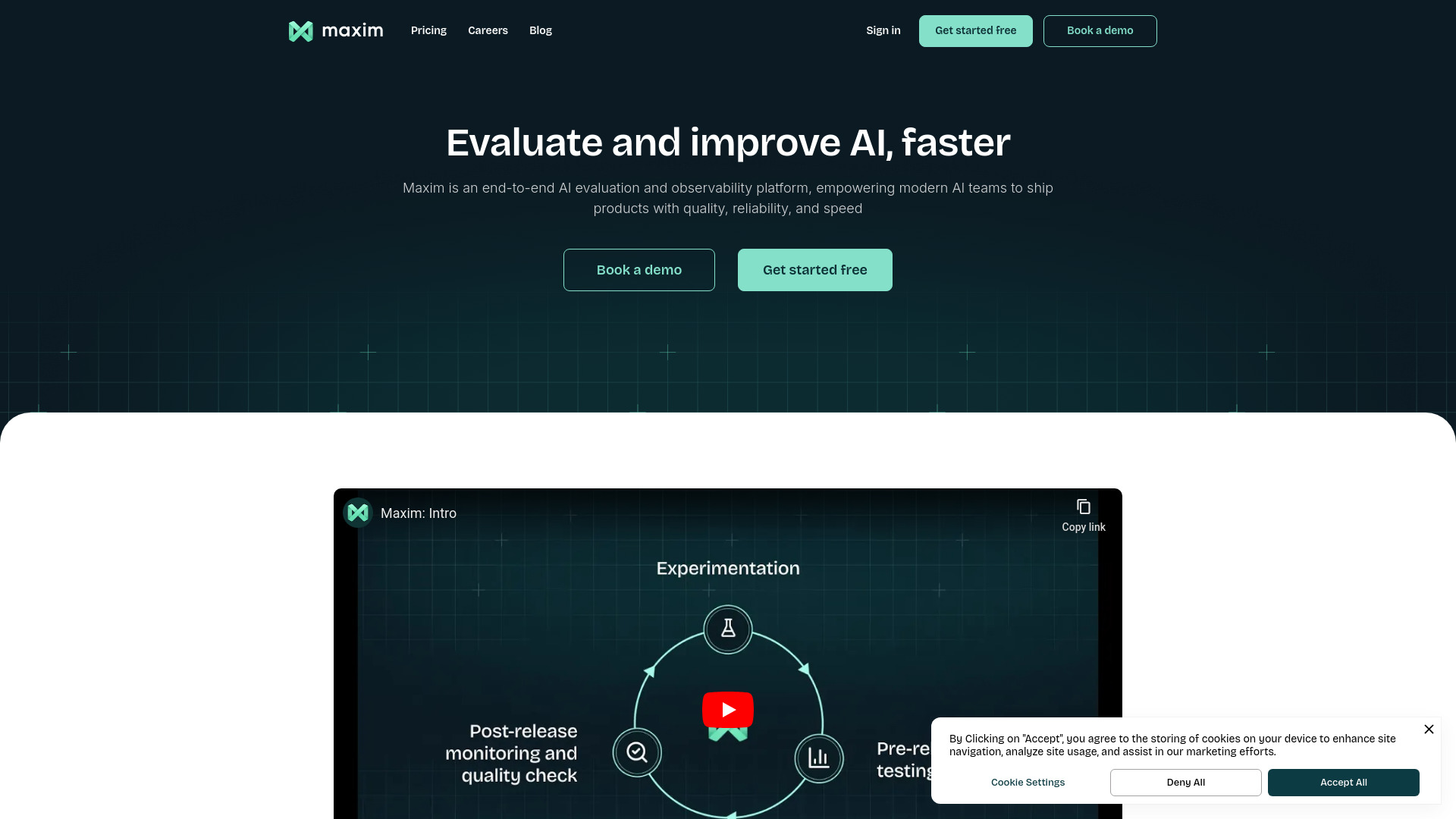

AI evaluation

Maxim is an end-to-end AI evaluation and observability platform that helps you test and deploy AI apps with greater speed and confidence. Its developer stack includes tools for the full AI lifecycle: experimentation, pre-release testing, and post-release monitoring. It offers features like agent simulation and evaluation, prompt engineering tools, observability, and continuous quality monitoring. Maxim supports various AI frameworks and provides SDKs, a CLI, and webhook support.

01 Dec 2024

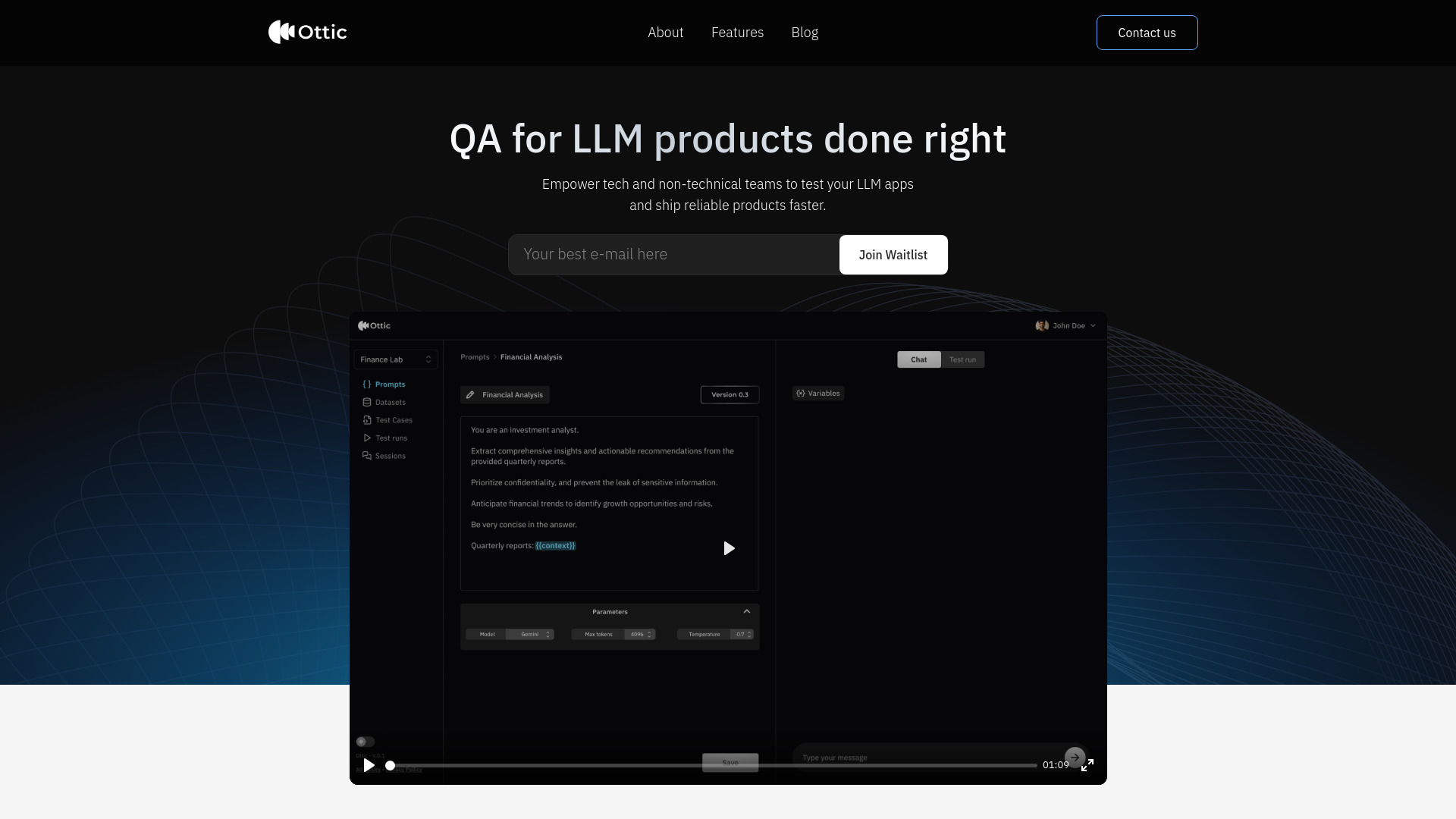

LLM evaluation

Ottic is a platform designed to evaluate LLM-powered applications, enabling both technical and non-technical teams to test their LLM apps and ship reliable products faster. It offers features like visual prompt management, end-to-end test management, comprehensive LLM evaluation, and user behavior monitoring. Ottic aims to provide a 360° view of the QA process for LLM applications, ensuring quality and reducing time to market.

09 Jul 2024
