LLM monitoring

Keywords AI is an LLM API positioned as a cost-effective alternative to GPT-4 without compromising on quality. It is also an LLM monitoring platform for AI startups that lets teams monitor and improve LLM applications with two lines of code. It helps teams debug and ship reliable AI features faster, acting in effect as a Datadog for LLM applications.

12 Oct 2023

AI Ops

Elixir is an AI Ops & QA platform designed for multimodal, audio-first experiences. It helps ensure the reliability of voice agents by simulating realistic test calls, automatically analyzing conversations, and identifying mistakes. The platform provides debugging tools with audio snippets, call transcripts, and LLM traces, all in one place.

20 Aug 2024

LLM evaluation

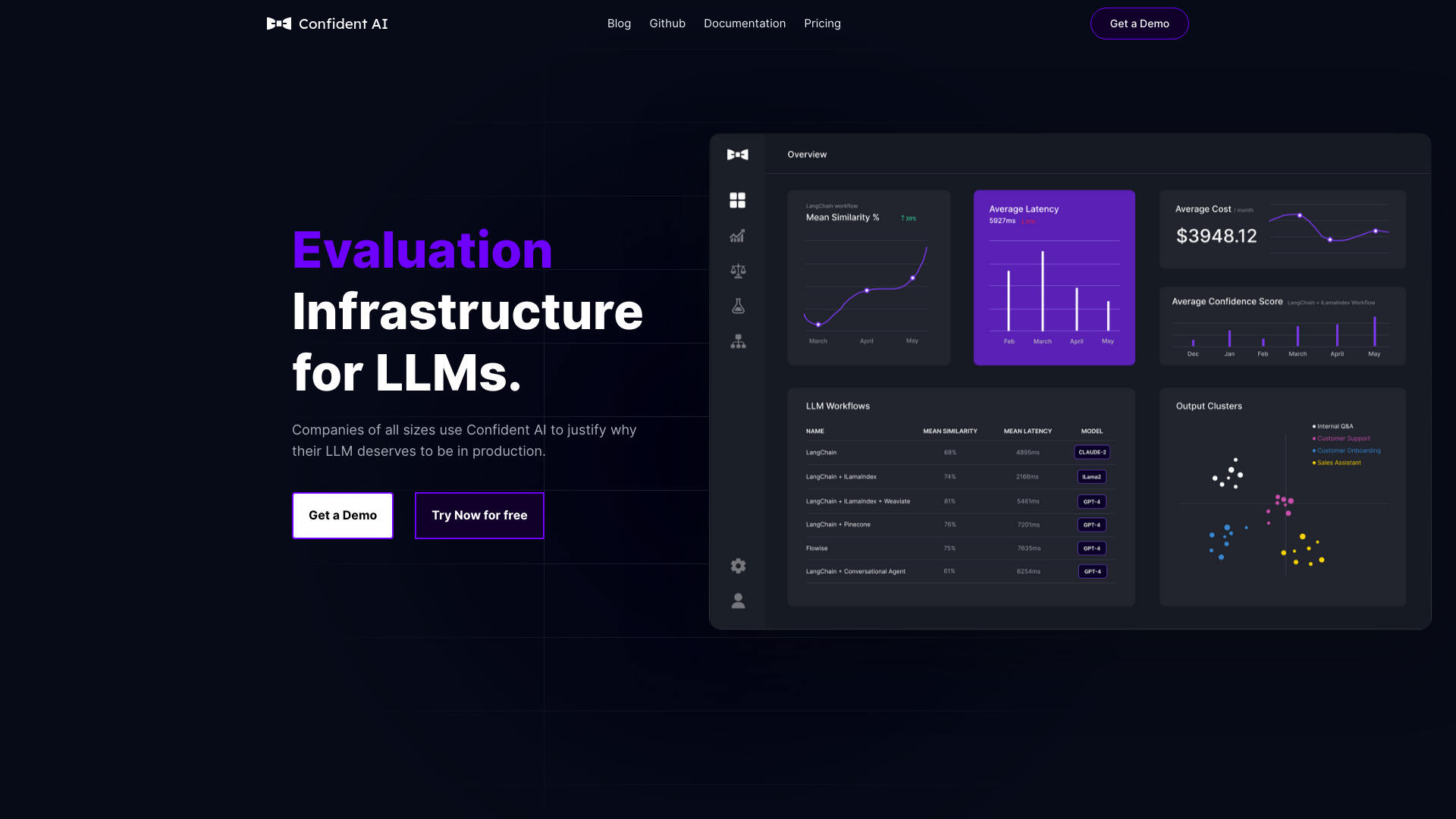

Confident AI is an all-in-one LLM evaluation platform built by the creators of DeepEval, an open-source framework, and it works with DeepEval for any use case. It offers 14+ metrics for running LLM experiments, along with tools to manage datasets, monitor performance, and integrate human feedback to improve LLM applications. Engineering teams use Confident AI to benchmark, safeguard, and improve LLM applications with metrics and tracing. It takes an opinionated approach to curating datasets, aligning metrics, and automating LLM testing, which helps teams save time, cut inference costs, and demonstrate AI system improvements to stakeholders.

31 Jul 2024

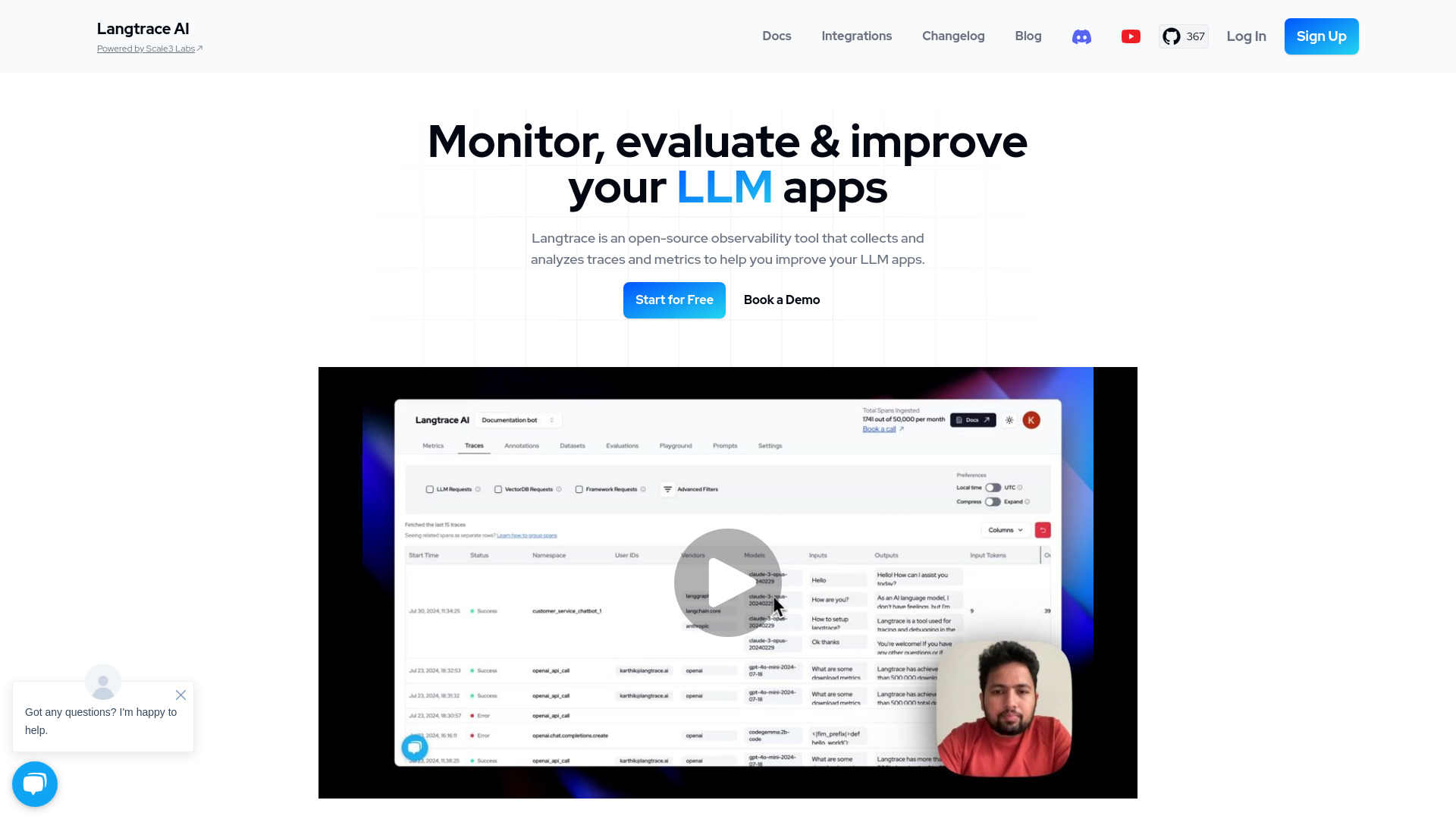

LLM observability

Langtrace AI is an open-source observability and evaluations platform for AI agents that helps you monitor, evaluate, and improve your LLM apps. It provides end-to-end visibility, security features, and integrations aimed at optimizing performance. Langtrace supports CrewAI, DSPy, LlamaIndex, and LangChain, as well as a wide range of LLM providers and vector databases out of the box.

10 Aug 2024
