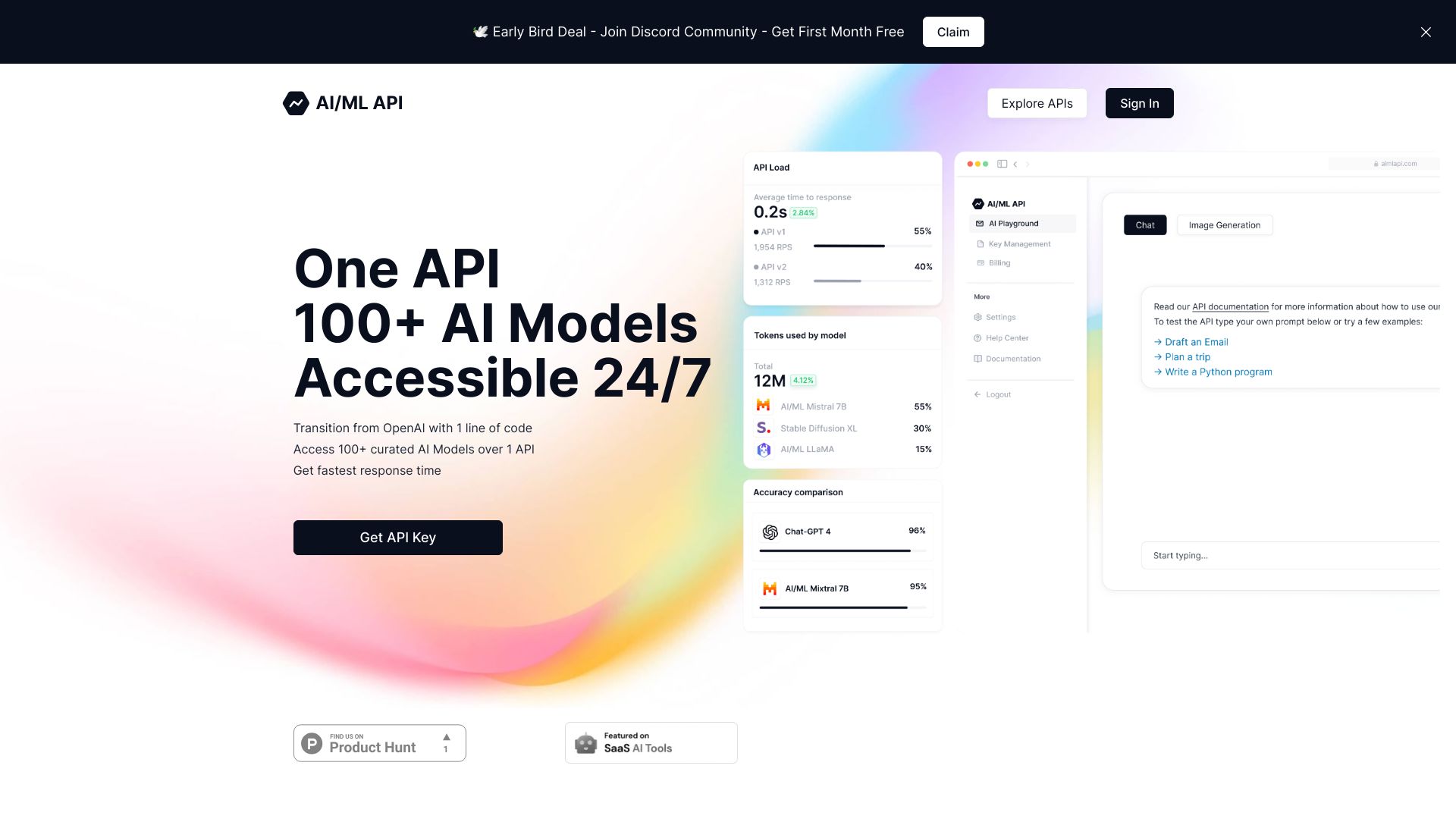

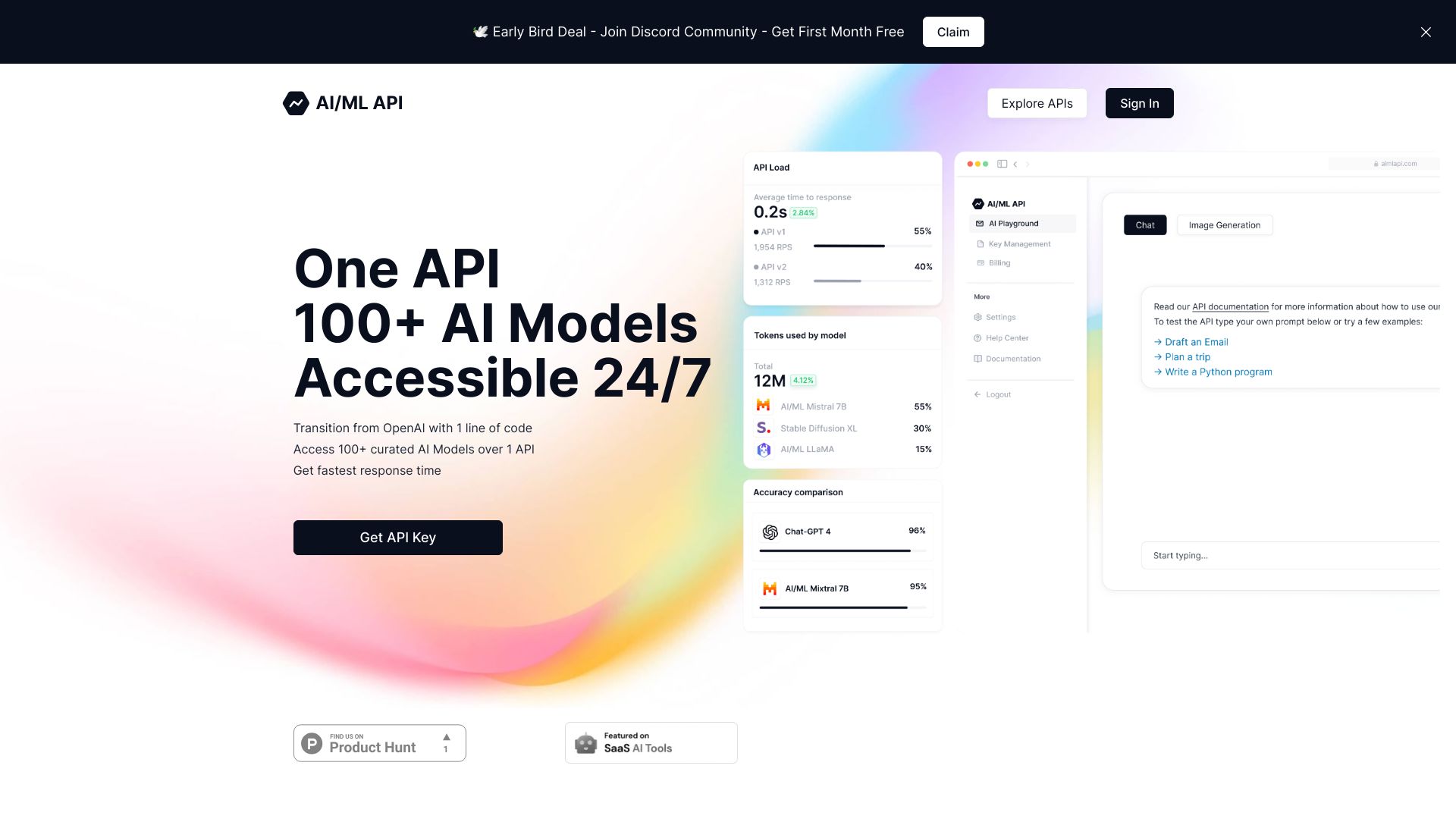

Large Language Models (LLMs)

AI Response Generator

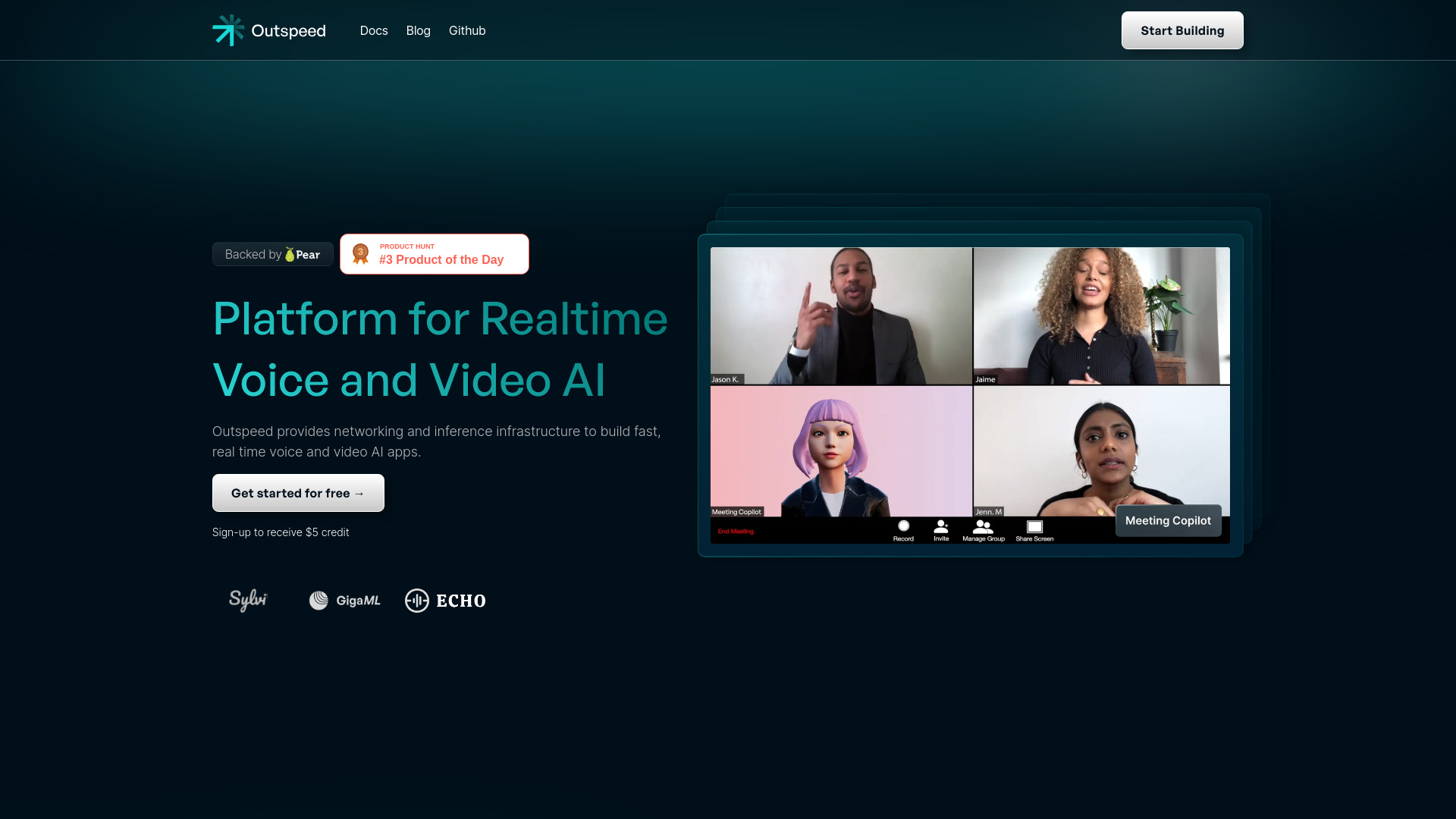

AI Voice Chat Generator

AI Voice Assistants

AI Youtube Summary
