Introduction

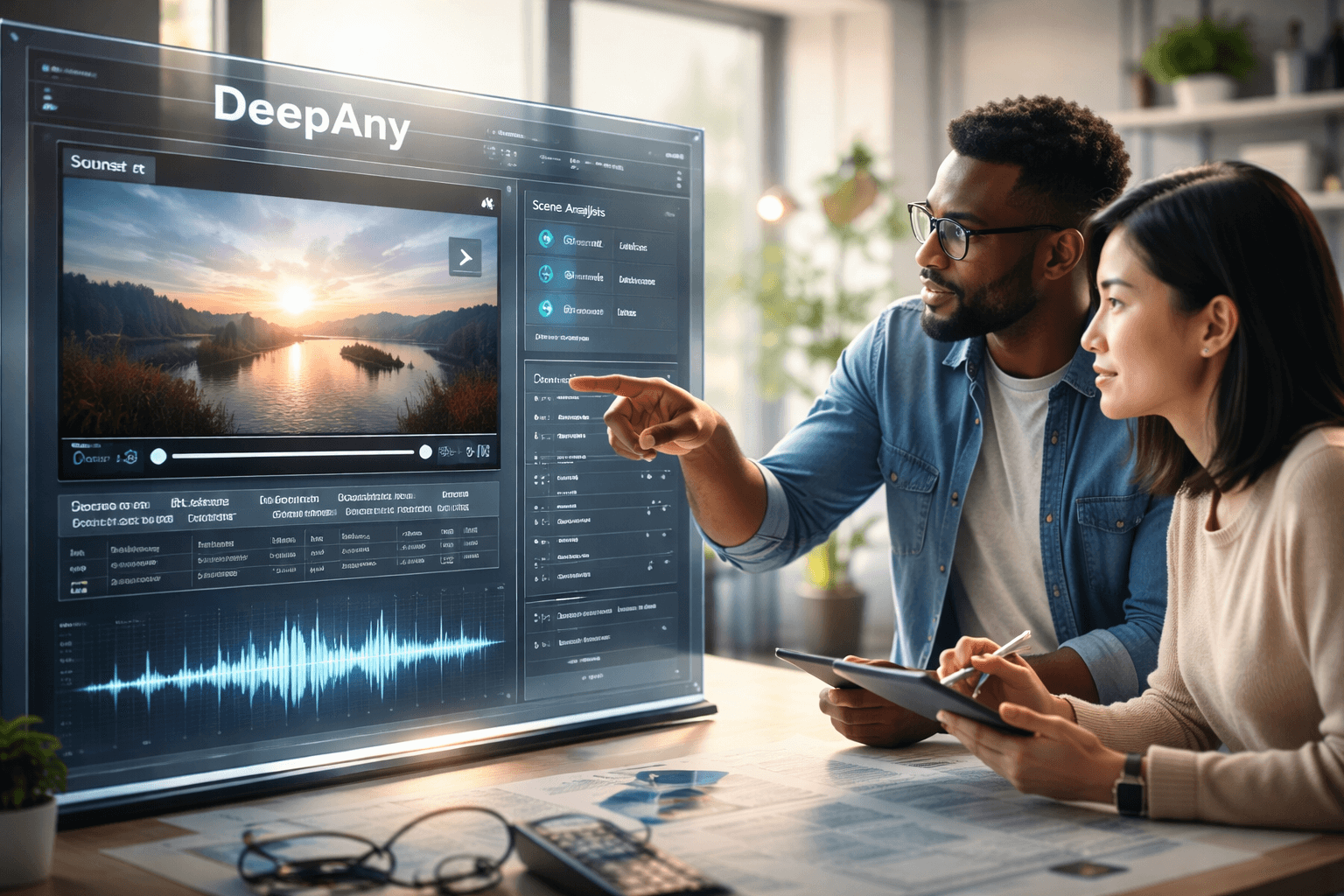

The trajectory of artificial intelligence has moved rapidly from text-based large language models (LLMs) to systems that can see, hear, and speak. However, true versatility—the ability to process and reason across different modalities simultaneously without friction—has remained a complex engineering challenge. DeepAny enters this space as a powerful solution, aiming to bridge the gap between disparate data types through a unified framework.

As the demand for more sophisticated AI interactions grows, tools that can natively understand the relationship between video, audio, text, and static imagery are becoming essential infrastructure. DeepAny positions itself at the forefront of this shift, offering a robust architecture designed not just to process these inputs, but to reason about them in a way that mimics human perception. For founders and developers building the next generation of AI-native applications, understanding the capabilities of DeepAny is crucial.

What DeepAny Does

At its core, DeepAny is a unified multimodal artificial intelligence system. Where many multimodal models rely on separate encoders for each data type (one for vision, one for text, one for audio), DeepAny uses a single streamlined architecture designed for "any-to-any" processing. It can accept inputs in various formats, such as a video clip, a spoken command, or a text query, and process them within a single inference cycle.

The platform is designed to eliminate the fragmentation often found in AI pipelines. Instead of stitching together multiple specialized models, DeepAny provides a cohesive engine that understands the semantic connections between modalities. This allows for high-fidelity understanding, such as analyzing the sentiment of a voiceover while simultaneously interpreting the visual context of a video frame.
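To make the "single inference cycle" idea concrete, here is a minimal sketch of how a mixed-modality request could be packaged as one unit. It is purely illustrative: the article does not describe DeepAny's actual API, so `Part`, `MultimodalRequest`, `to_payload`, the field names, and the file paths are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical modality tags, taken from the four input types the article lists.
SUPPORTED_MODALITIES = {"text", "image", "audio", "video"}


@dataclass
class Part:
    """One input item: a modality tag plus inline text or a file reference."""
    modality: str
    content: str

    def __post_init__(self) -> None:
        if self.modality not in SUPPORTED_MODALITIES:
            raise ValueError(f"unsupported modality: {self.modality!r}")


@dataclass
class MultimodalRequest:
    """All parts travel together so a model could reason over them jointly."""
    parts: List[Part] = field(default_factory=list)
    output_modality: str = "text"

    def to_payload(self) -> dict:
        # Serialize to a plain dict that could be sent as JSON.
        return {
            "output_modality": self.output_modality,
            "inputs": [{"type": p.modality, "content": p.content} for p in self.parts],
        }


# Hypothetical example: video, voiceover, and a question in one request.
request = MultimodalRequest(
    parts=[
        Part("video", "clips/demo.mp4"),
        Part("audio", "clips/voiceover.wav"),
        Part("text", "Does the narrator's tone match what is shown on screen?"),
    ]
)
payload = request.to_payload()
print(len(payload["inputs"]), "inputs in one request")
```

The point of the sketch is the shape of the data: the video, the voiceover, and the question arrive as one request rather than as three calls to three specialized models whose outputs must be merged afterwards.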

Key Features and Capabilities

- Unified Multimodal Architecture: DeepAny employs a single, integrated framework to process text, image, video, and audio, reducing the computational overhead associated with managing multiple disjointed models.

- High-Fidelity Video Understanding: The model excels at temporal analysis, allowing it to understand cause-and-effect relationships within video content rather than just analyzing static keyframes.

- Cross-Modal Reasoning: DeepAny allows for complex reasoning tasks, such as answering questions about a video based on audio cues, or generating textual descriptions that accurately reflect visual nuances.

- Scalable Integration: Designed for developers, the framework supports robust APIs and integration points, making it feasible to embed advanced multimodal capabilities into existing software ecosystems.

- Zero-Shot Capabilities: The model demonstrates strong performance on tasks it hasn't been explicitly trained for, showcasing deep generalization abilities across different media types.

Use Cases and Practical Applications

The versatility of DeepAny opens up a wide array of operational applications for startups and enterprises:

Advanced Media Search and Retrieval

Media companies can use DeepAny to index vast libraries of video and audio content. Users can search for specific moments using natural language (e.g., "Find the clip where the car drives off the cliff during sunset"), significantly streamlining post-production and archival workflows.
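One common way to implement this kind of search, assuming the model can embed both clips and text queries into a shared vector space, is nearest-neighbor ranking by cosine similarity. The sketch below uses hand-written toy vectors in place of real embeddings; the clip names, timestamps, and the `search` helper are hypothetical and are not part of any documented DeepAny interface.

```python
import math
from typing import List, Tuple

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Toy index of (clip id, start time in seconds, embedding).
# Real embeddings would come from a multimodal model; these are made up.
CLIP_INDEX: List[Tuple[str, float, Vector]] = [
    ("interview_01", 0.0, [0.9, 0.1, 0.0]),
    ("car_chase_07", 42.5, [0.1, 0.8, 0.6]),
    ("beach_sunset_03", 12.0, [0.0, 0.3, 0.9]),
]


def search(query_vec: Vector, index, top_k: int = 2):
    """Rank indexed clips by similarity to the query embedding."""
    scored = [(clip_id, start, cosine(query_vec, vec)) for clip_id, start, vec in index]
    return sorted(scored, key=lambda row: row[2], reverse=True)[:top_k]


# Stand-in for the embedding of a query like "car drives off a cliff at sunset".
query_vec = [0.1, 0.7, 0.7]
results = search(query_vec, CLIP_INDEX)
for clip_id, start, score in results:
    print(f"{clip_id} @ {start:.1f}s  score={score:.3f}")
```

At production scale the linear scan would typically be replaced by an approximate nearest-neighbor index, but the ranking logic stays the same.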

Autonomous Systems and Robotics

Robotics startups can leverage the model's visual and audio reasoning capabilities to improve environmental perception. A robot could understand verbal commands while simultaneously interpreting visual obstacles, leading to safer and more responsive navigation.

Educational Tech and Accessibility

DeepAny can automatically generate rich, descriptive captions for video content or convert visual diagrams into spoken explanations. This enhances accessibility for visually impaired users and creates more immersive learning experiences for students.

Automated Content Moderation

Trust and safety teams can deploy the model to analyze user-generated content across all formats. By understanding context in both audio and video simultaneously, DeepAny can detect nuances in harmful content that unimodal text filters would miss.

Why DeepAny Stands Out

The primary differentiator for DeepAny is its commitment to a truly unified architecture. Many competitors in the multimodal space still rely on "gluing" together a vision encoder (like CLIP) with a language model (like Llama). While effective, this approach often results in a loss of nuance, particularly in how the modalities interact.
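For context, the "glued" design is often wired roughly as follows: a vision encoder produces patch features, a small projection layer maps them into the language model's embedding space, and the projected "visual tokens" are placed in the same sequence as the text tokens. The sketch below shows only that data flow, with toy dimensions and made-up numbers; it is not a description of DeepAny or of any specific competitor.

```python
from typing import List

Vector = List[float]


def project(features: Vector, weights: List[Vector]) -> Vector:
    """Linear map from vision-feature space into the LM's embedding space."""
    return [sum(w * f for w, f in zip(row, features)) for row in weights]


# Toy sizes: 4-dim vision features, 3-dim language-model embeddings.
image_patch_features = [
    [1.0, 0.0, 2.0, 0.0],
    [0.0, 1.0, 0.0, 1.0],
]
projection = [  # 3 x 4 matrix; learned in a real system, hand-set here
    [0.5, 0.0, 0.0, 0.0],
    [0.0, 0.5, 0.0, 0.0],
    [0.0, 0.0, 0.5, 0.5],
]
text_token_embeddings = [
    [0.1, 0.2, 0.3],
    [0.4, 0.5, 0.6],
]

# Each image patch becomes one "visual token" in the LM's embedding space.
visual_tokens = [project(patch, projection) for patch in image_patch_features]

# The language model then sees one sequence: visual tokens, then text tokens.
lm_input_sequence = visual_tokens + text_token_embeddings
print(len(lm_input_sequence), "tokens of dimension", len(lm_input_sequence[0]))
```

Because the vision encoder is typically trained separately, the projection is the only bridge between the two components, which is one place where the loss of nuance mentioned above can arise.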

DeepAny's approach suggests a tighter integration in which the boundaries between seeing, hearing, and reading are blurred within the model's internal representation. In principle, this should allow faster inference on complex tasks and higher accuracy in scenarios that require deep contextual understanding. For investors and strategic decision-makers, DeepAny represents a move toward more efficient, general-purpose AI models that can handle the complexity of the real world without requiring a large stack of specialized tools.

Conclusion

DeepAny illustrates how quickly multimodal AI is maturing. By moving beyond simple text processing toward unified sensory understanding, it provides the building blocks for applications that are more intuitive, more capable, and better aligned with how people interact. As the AI landscape shifts from chatbots to "do-engines" that can perceive and act, frameworks like DeepAny will likely serve as a foundational layer for future innovation.


Frequently Asked Questions

What makes DeepAny different from models like GPT-4?

While models like GPT-4 are also multimodal, DeepAny specifically emphasizes a unified architecture optimized for "any-to-any" reasoning across video, audio, image, and text, with a focus on handling all of these modalities in one model rather than in a chain of separate components.

Can DeepAny process video and audio simultaneously?

Yes, DeepAny is designed to understand and reason across video and audio inputs concurrently, making it highly effective for analyzing multimedia content where context is split between visual and auditory cues.

Is DeepAny suitable for commercial application development?

Yes. DeepAny is built with scalability in mind and supports APIs and integration points for developers, which makes it relevant for enterprises building search engines, content moderation tools, and autonomous systems.

Does DeepAny support real-time processing?

Performance depends on the specific implementation and hardware, but its unified architecture is designed to be more efficient than pipeline-based approaches, facilitating near real-time applications.

What industries benefit most from DeepAny?

Sectors heavily reliant on multimedia data, such as media and entertainment, robotics, education technology, and security/surveillance, stand to gain the most from its capabilities.